I felt honored to be invited to facilitate the Test Lab at STAREAST. Since this was my first Lab at this particular conference, I was lucky to have Bart Knaack, James Lyndsay and Wade Wachs bring me up to speed beforehand. They told me about their experiences with the Lab at STAREAST and gave me valuable insights from their personal kit of lessons learned. Their input helped me change the approach I usually take when running Labs at EuroSTAR and BTD. Once again, context wins over pre-established “how-to”s. This is how I came to focus the Lab experiments on bug logging, rather than on the usual wide range of multi-focus experiments that I described in more detail in a previous blog post about the EuroSTAR Lab.

STAREAST is one of the three STAR conferences organized by Techwell: STAREAST, STARWEST and STARCANADA. These are among the largest conferences on software testing in the US and include short sessions, half-day and full-day tutorials, multi-day in-depth training, a Leadership Summit and, of course, a Test Lab.

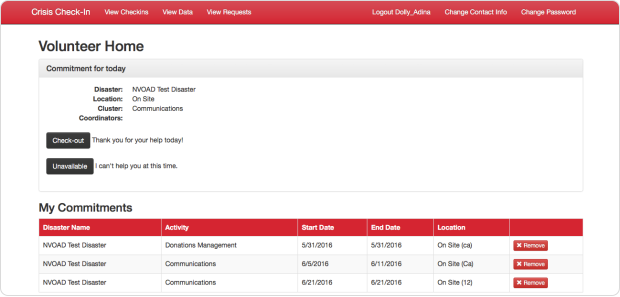

For the Test Lab, Techwell teamed up with the Humanitarian Toolbox to help test its applications, which have become vital resources for many disaster relief organizations. Crisis Checkin is one of them. It is built by volunteer developers, and the code is public on GitHub.

You might have already guessed that the focus of the Test Lab was to test the application and log bugs. The stakeholders were interested in a recently developed feature and in improving the user experience.

The challenge of shallow testing and duplicate bugs

The Lab started on Tuesday evening and was open until Thursday afternoon. People came into the Lab, and we, the Lab Crew, introduced them to the application under test and the areas of interest to focus on. Then they started to test and report bugs. Some people had 10 minutes to spend in the Lab, others half an hour, and a few had more time. It’s not hard to predict the problems of such a context and workflow: shallow testing and duplicate bugs. At a certain point everyone was finding the same bug. Spending time investigating, isolating and writing up the steps, only to find out that the bug had already been reported, was not only inefficient but also demoralizing for participants.

Throughout the first two days we tried to minimize these issues by talking with the participants, and we managed to reduce the number of duplicates a bit. But the next day I decided to try something else: I encouraged people to review the bugs that were already logged. This seems to have worked extremely well, and I want to share with you the benefits of this approach.

Photo credit: Jyothi Rangaiah

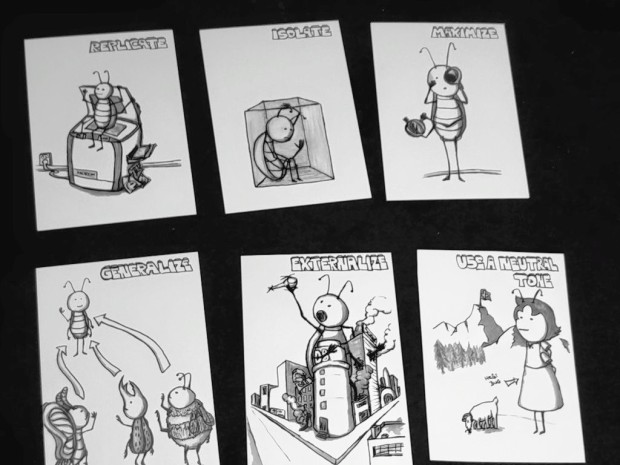

1) People learned something new. To help them review the bugs, we presented them with the six approaches to bug reporting, RIMGEN (Replicate, Isolate, Maximize, Generalize, Externalize, Neutral tone), as a checklist to take into account. I came across this mnemonic in the BBST Bug Advocacy course, and I found it so useful that I can’t imagine my bug reporting without it. So, participants learned something new in addition to applying what they already knew.

2) Thorough testing. While going through the steps of RIMGEN, people investigated the bugs in more depth and diversified their test scenarios. Many of the reported bugs were poorly described and insufficiently investigated. I also liked a side effect of this approach: a more precise focus, which provided the context for more thorough testing. During the previous days I had observed that stating the focus only verbally was not enough, and testers were easily distracted by bugs in other areas of the application. Having the written bug report in GitHub in front of them helped people keep their focus.

3) No more duplicates and better use of time. A lot of time had been spent on finding bugs that were already reported. This was efficient neither for HTBox nor for the testers who wanted to contribute and help the volunteers have a better application. This new approach helped us build on top of the existing work rather than starting from scratch each time.

To make this review process more visible and fun, we had a dashboard on a flip-chart where we marked in red the bugs that needed more information. We also used Altom’s RIMGEN postcards, drawn by Levi, to emphasize which RIMGEN step needed more investigation. Once we agreed on the step, a card would appear out of my Lab Coat pocket. After the exercise was completed, the card became a souvenir for the people involved.
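For readers who would rather keep such a dashboard in a script than on a flip-chart, here is a minimal sketch of the same idea. It is not what we used in the Lab: the `BugReview` class, the `dashboard` function and the example bug title are all hypothetical names made up for illustration. Only the six RIMGEN items come from the checklist described above.

```python
from dataclasses import dataclass, field

# The six RIMGEN items, as listed in the post.
RIMGEN = ("Replicate", "Isolate", "Maximize",
          "Generalize", "Externalize", "Neutral tone")


@dataclass
class BugReview:
    """Hypothetical record of one reviewed bug report."""
    title: str
    covered: set = field(default_factory=set)

    def mark(self, step: str) -> None:
        """Record that the report now covers the given RIMGEN step."""
        if step not in RIMGEN:
            raise ValueError(f"unknown RIMGEN step: {step}")
        self.covered.add(step)

    def missing(self) -> list:
        """Return the RIMGEN steps not yet covered, in RIMGEN order."""
        return [step for step in RIMGEN if step not in self.covered]

    def needs_more_info(self) -> bool:
        """True while at least one RIMGEN step is still uncovered."""
        return bool(self.missing())


def dashboard(reviews: list) -> list:
    """One line per bug; RED marks a bug that needs more information."""
    return [
        f"{'RED' if review.needs_more_info() else 'OK'}: {review.title}"
        for review in reviews
    ]


# Example with a made-up bug title.
review = BugReview("Made-up example bug")
review.mark("Replicate")
review.mark("Isolate")
print(review.missing())
print(dashboard([review]))
```

The list returned by `missing()` plays the role of the postcard: it tells the next reviewer which step to pick up, so the work builds on the existing report instead of starting over.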

Photo credit: Jyothi Rangaiah

What I learned from people

At this conference I had the chance to spend time with Jyothi, my Lab colleague. The two of us have very different cultural backgrounds. On the one hand this brought diversity, but on the other it made the collaboration a bit more difficult. Our interactions gave me great food for thought. For example, during the last conference day I proposed a retrospective discussion about what went well in the Lab and what didn’t, so that we could improve next time. Jyothi had an interesting point of view, which is a common concept in her culture: she said that things are not intrinsically good or bad, they are neutral. We label them good or bad according to how we feel. I was puzzled to hear that in this context, and dropped the retrospective idea. I am still puzzled, even now :).

Another insightful experience was playing Paul Holland’s magic card games. He came into the Lab area and invited Jyothi and me to play a card game. Luckily we had time for that, as the Lab officially started only later that day. So, we started with a card game similar to the well-known dice game used in the RST course that he, James Bach and Michael Bolton teach. I was very close to the solution. I even verbalized the key aspect of the pattern, but I was not there yet. I would have needed to make a few more connections to make sense of it.

This personal experience reminded me of another experiment with cards, called red deck – blue deck. The experiment measures how fast people understand the presented pattern. Its conclusion is that people recognized the pattern eight times faster with their endocrine system, as measured by the sweat on their palms, than with their logical system.

Going back to Paul and how he performs his magic tricks (by the way, Paul is in the testing business), an interesting aspect is that he always tells the truth. Moreover, he inserts hints while doing the illusions. Even so, we still didn’t get them :). We seem to have been too absorbed in the magic to notice the things we didn’t expect, like in the Monkey Business Illusion.

During the Lab, the session masters were very supportive, especially James Bach. He, Michael Bolton and Jon Bach ran a touring session of the HTBox application, identifying and staying aware of the skills they used as they went. There was a projector connected to James’s laptop, so we could see in real time the actions he took to test. It was insightful to observe his strategies, his interactions with the audience and his test notes. You can see here Jon’s and James & Launi’s notes. I love how Jon evaluated the application and highlighted the important aspects in the summary. Also, the bugs and issues are very nicely separated.

Another session was held by the lovely Isabel Evans. She’s a great mind and soul, and I was so glad that she offered to have a session in the Lab! This was a very good occasion for people to learn from her expertise in user experience evaluation, especially since HTBox was particularly interested in UX for their application. What I can tell you is that the session started with a drawing of a stickman on a flipchart detailing our senses. After one hour, the output was a long list of identified bugs and a very engaged audience. You can see Isabel’s supporting slides here.

Working with the conference organizers was also a pleasure. Techwell was a great support and made the whole conference experience even more pleasant. I was impressed by how Alison Wade and Jason Hausman were always there to help out with the Lab, and to me they never seemed in a hurry. I am sure that when running a big conference like STAREAST there are always things going on that need the organizers’ constant attention. I very much appreciated their ability to stay calm and to find time to listen, joke, and give a hand.

All in all, the whole conference atmosphere was great from my perspective. People were supportive of each other, something I felt strongly as a Test Lab facilitator. I went home with good feelings and good thoughts to digest.

As for you, dear reader, because you have heroically managed to reach the end of this blog post, I’ll share a video with you to give you a taste of the atmosphere at the STAREAST Test Lab 2016.