AI-Assisted Test Automation

A Real-World Refactoring Case Study in a Video Surveillance Project

This case study explores how generative AI tools can support testers in refactoring and maintaining automated tests. It reveals the strengths and limitations of using GenAI in a complex, real-world testing environment, showing where human expertise remains irreplaceable and how AI can meaningfully assist in debugging, refactoring, and documentation.

Key achievements

Refactored a legacy test suite using GenAI and Agent-mode tools, improving maintainability and debugging efficiency.

Main challenge

The legacy automation framework was no longer maintained, leading to technical debt and reduced testing efficiency.

AI impact

Copilot accelerated debugging and refactoring with clear, actionable suggestions when guided precisely.

Tech Stack

Language & Frameworks: Java, Spring, TestNG

Tools: VSCode + GitHub Copilot Agent (Claude Sonnet 4), Allure for reports, GitHub for version control

The Context

Test automation for “when we’ll have spare time”

It started the way many of these stories do: with a test automation framework no longer actively maintained. About 60% of the tests were either broken or skipped.

The original authors of the framework had long since left. The only remaining tester on the project was focused on testing new features and on periodically running the “regression test suite” by hand, with no tool support and no bandwidth to dig into the automation suite. Sound familiar?

This was the setup when we decided to dive in—not just to revive the framework, but to experiment with how far we could go with the help of GenAI tools.

Goals

- Integrate GenAI tools efficiently into the project to support tasks such as maintaining, refactoring, and extending a test automation framework.

- Build and strengthen our fluency in using GenAI tools as part of our regular testing activities.

The process: an overview

We decided to focus on the UI Smoketest suite only for this experiment. We outlined a simple but structured process to guide the work:

- Fix the broken tests first – without a green baseline, refactoring would be guesswork.

- Evaluate refactoring needs by ourselves first – identify pain points based on the project’s needs and from a business perspective.

- Prompt GenAI to identify what refactoring is needed and relevant – generate its own list of refactoring suggestions.

- Analyze the suggestions generated by GenAI and see what overlaps with our own list and what does not (learning opportunity for the team).

- Merge and prioritize both lists – use human + AI insights.

- Refactor iteratively with GenAI’s help and with code review done by R.

- Add one new test to understand what the process is for extending the test automation framework with GenAI assistance.

- Hand it off – through pair programming and knowledge transfer to the on-project tester.

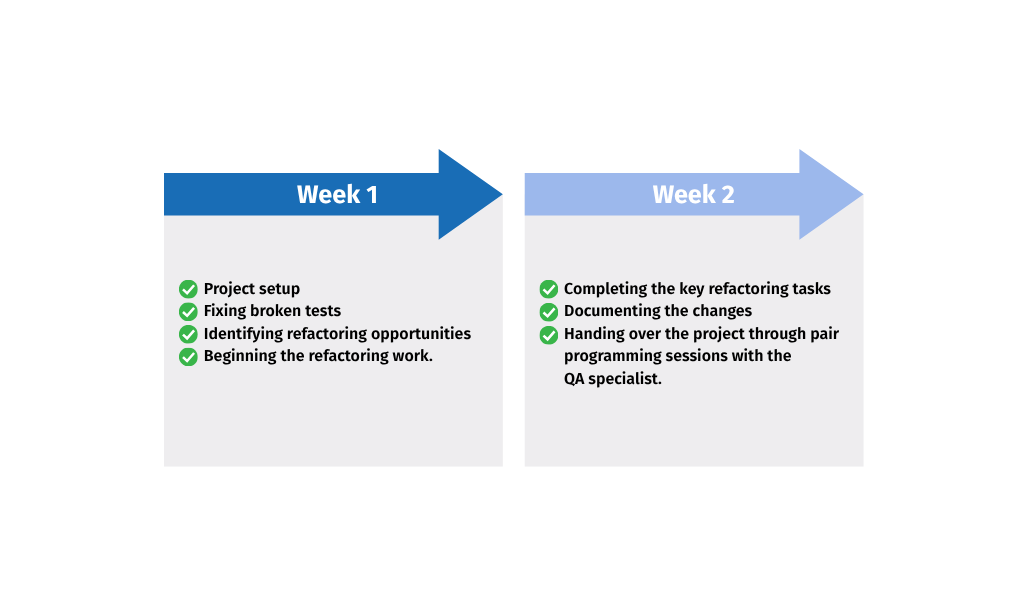

Timeline

We allocated two weeks for this process, with a clear goal of prioritizing the refactoring list so we could tackle the most critical aspects first. Any remaining items were handed over to the QA specialist for continuing refactoring and maintenance work.

The Process: step-by-step walkthrough

The Fixing Phase

Once we had the project running locally, thanks to some fast setup and help from the tester still working on the project, we started by running the UI smoke test suite (we ignored the API and UI regression suites for this experiment). On the first run, we noticed that 60% of the tests were broken or skipped due to issues in @BeforeClass.

After some digging, we agreed to tackle the root cause first: an unreliable cleanup flow for the data generated during test runs. We introduced proper lifecycle management for test entities (creation + cleanup), and this stabilized the suite considerably. But applying the changes manually across many classes? Repetitive and time-consuming. So we felt this was the right task for the AI to assist with.
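To make “creation + cleanup” concrete, here is a minimal sketch of one way such lifecycle management can be structured. It is illustrative only and not the project’s code: the class `TestEntityTracker` and its methods are hypothetical names, and the cleanup actions stand in for whatever API or UI calls actually delete an entity.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/** Hypothetical helper: remembers how to delete every entity a test creates. */
class TestEntityTracker {
    // Cleanup actions, most recently registered first.
    private final Deque<Runnable> cleanups = new ArrayDeque<>();

    /** Register a cleanup action right after an entity has been created. */
    void register(Runnable cleanup) {
        cleanups.push(cleanup);
    }

    /**
     * Run all cleanups in reverse creation order. A failing cleanup does not
     * stop the remaining ones; failures are returned so the caller can log them.
     */
    List<RuntimeException> cleanUpAll() {
        List<RuntimeException> failures = new ArrayList<>();
        while (!cleanups.isEmpty()) {
            try {
                cleanups.pop().run();
            } catch (RuntimeException e) {
                failures.add(e);
            }
        }
        return failures;
    }
}
```

In a TestNG suite, a tracker like this would typically be created in a `@BeforeMethod` setup method and `cleanUpAll()` called from an `@AfterMethod(alwaysRun = true)` method, so that cleanup still runs when the test itself fails.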

Using GenAI for Fixes

Since we had already fixed a few classes by ourselves, we had great “context” for Copilot to work with. We asked it to refactor the remaining classes based on the patterns we’d established. The results were… surprisingly solid!

But not perfect:

The solution Copilot proposed tried to create entities with the same emails and names. Since we had multiple tests in a class, entities had to be created in @BeforeMethod instead of @BeforeClass, which means every test needs its own distinct data. We weren’t expecting this to be something that needed to be specified in the prompt, but it was easy to fix with a follow-up prompt.

It broke the test data cleanup. When it then came up with a fix, it did not analyze the impact that fix would have on other areas, so the fix broke the entity creation in @BeforeMethod. The lesson learned here: be explicit about the scope you want the AI to focus on.

It struggled with multi-level inheritance—it didn’t traverse far enough down the class chain.

Tip: Tell the AI what to look at—chunk by chunk or across the board. It won’t guess.
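The first issue above (entities created repeatedly with the same emails and names) is usually avoided by generating unique values for every test method. A minimal sketch, assuming a random suffix is acceptable for the application’s validation rules; `UniqueTestData` and the `example.test` domain are hypothetical and not taken from the project:

```java
import java.util.UUID;

/** Hypothetical helper that builds collision-free names and emails for test entities. */
final class UniqueTestData {
    private UniqueTestData() {}

    /** Short random hex suffix; a new one on every call. */
    static String suffix() {
        return UUID.randomUUID().toString().substring(0, 8);
    }

    /** Builds a name of the form "<prefix>-<suffix>". */
    static String uniqueName(String prefix) {
        return prefix + "-" + suffix();
    }

    /** Builds an email of the form "<prefix>-<suffix>@<domain>". */
    static String uniqueEmail(String prefix, String domain) {
        return uniqueName(prefix) + "@" + domain;
    }
}
```

Calling `UniqueTestData.uniqueEmail("customer", "example.test")` inside a `@BeforeMethod` setup would give each test its own entity, at the cost of less predictable data in reports.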

The Refactoring Phase

Once we had stable tests, it was time to refactor. We took a dual-pronged approach:

- Human Assessment: Based on code reviews and input from the tester.

- GenAI Assessment: Prompted to analyze the smoke UI suite and suggest improvements.

Here’s what we found:

Human-generated Refactoring List:

1. Use a less-privileged user (safe to run on prod environment) - VERY IMPORTANT

2. Replace deprecated methods/libraries

3. Add screenshot capture for easier debugging

4. Extract duplicated code

5. Group tests better (by functionality/test data)

6. Use relevant names for test classes

Copilot’s List:

1. Massive Code Duplication: `UsersLoginTests.java` contains 5 nearly identical test methods with 80%+ code overlap

2. Inconsistent Login Setup: Each test class implements its own login logic with variations

3. All Tests Commented Out: `UsersLoginTests.java` has all `@Test` annotations commented, making it non-functional

4. Inconsistent Naming: Mixed naming conventions across test classes

5. Repeated Setup Logic: Customer data setup duplicated across multiple classes

6. Hard-coded Values: Magic strings and values scattered throughout tests

7. Inconsistent Assertion Patterns: Mixed use of `softAssertion` vs `assertThat`

* Point 3: the tests had been commented out because they were failing due to a known application bug.

Some of the more context-specific improvements—especially those tied to the actual needs of the project team and tester—were only present in the human-curated list. There are obviously many overlaps in the refactoring suggestions, and Copilot even flagged a few things we’d overlooked.
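As an illustration of the first item on Copilot’s list, near-identical test methods are commonly collapsed into one data-driven check. The sketch below is not the project’s code: `LoginCase`, `LoginCaseRunner`, and the `login` predicate are hypothetical stand-ins for the real test steps.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BiPredicate;

/** One row of test data: the inputs and the expected outcome (all names hypothetical). */
final class LoginCase {
    final String description;
    final String username;
    final String password;
    final boolean shouldSucceed;

    LoginCase(String description, String username, String password, boolean shouldSucceed) {
        this.description = description;
        this.username = username;
        this.password = password;
        this.shouldSucceed = shouldSucceed;
    }
}

final class LoginCaseRunner {
    private LoginCaseRunner() {}

    /**
     * Runs every case through the same login step and returns the descriptions
     * of the cases whose outcome differed from the expectation.
     */
    static List<String> run(List<LoginCase> cases, BiPredicate<String, String> login) {
        List<String> mismatches = new ArrayList<>();
        for (LoginCase c : cases) {
            boolean actual = login.test(c.username, c.password);
            if (actual != c.shouldSucceed) {
                mismatches.add(c.description);
            }
        }
        return mismatches;
    }
}
```

In TestNG the same idea is usually expressed with a `@DataProvider` feeding a single `@Test` method, which keeps one reported result per data row.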

Refactoring with GenAI

The next step was to actually refactor the tests based on the ideas we had gathered. Because the experiment was timeboxed, we had limited time for refactoring, so we picked 3 items from the merged list of refactoring suggestions:

1. Use a less-privileged user (safe to run on prod environment) - High importance & complexity

2. Add screenshot capture for easier debugging - Medium importance & complexity

3. Replace deprecated methods/libraries - Low importance & complexity

Some refactorings were straightforward, while others were more complex and required multiple iterations. One of the more complex changes, switching to a less privileged user for the tests, touched on business logic as well, not just code structure. To implement this, we started by gradually updating one of the test classes, using AI only for small coding tasks. Once that class was fully refactored, we used it as “context” for Copilot and asked it to refactor the remaining smoke UI tests in a similar way. Multiple iterations were necessary, and each round helped us refine our understanding of what the test suite really needed.

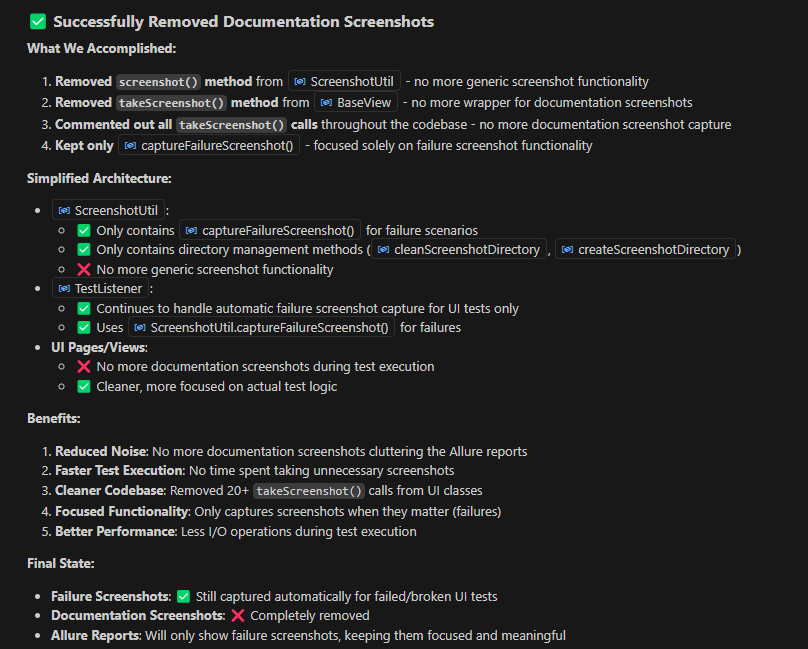

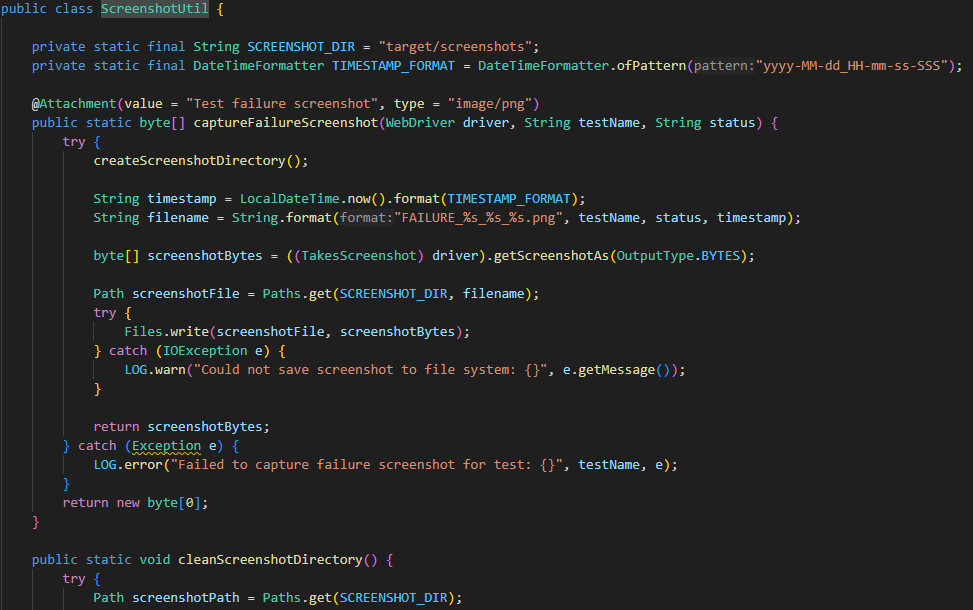

Prompt example:

I am using Allure as reporting tool.

I want all my web smoke tests to have the following capability:

– if a test fails, a screenshot of the application is taken at that point, before any other cleanup/teardown action occurs.

– the screenshot is to be attached to the allure report for the test that failed

– before each suite run, the temporary directory with screenshots should be cleaned

After several iterations and follow-up prompts, Copilot responded:
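For illustration only (this is not Copilot’s output), the file-handling side of the three requirements in the prompt can be sketched with plain JDK calls. `FailureScreenshots` is a hypothetical name, and the `pngSource` supplier stands in for the real capture call, which depends on the UI driver in use.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Comparator;
import java.util.function.Supplier;
import java.util.stream.Stream;

/** Hypothetical helper covering the file-handling side of the prompt's requirements. */
class FailureScreenshots {
    private final Path dir;

    FailureScreenshots(Path dir) {
        this.dir = dir;
    }

    /** Call once before the suite starts: delete old screenshots, recreate the directory. */
    void resetDirectory() {
        try {
            if (Files.exists(dir)) {
                try (Stream<Path> paths = Files.walk(dir)) {
                    // Reverse order puts children before their parent directories.
                    paths.sorted(Comparator.reverseOrder()).forEach(p -> {
                        try {
                            Files.delete(p);
                        } catch (IOException e) {
                            throw new UncheckedIOException(e);
                        }
                    });
                }
            }
            Files.createDirectories(dir);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /**
     * Call when a test fails, before any teardown runs. pngSource is an
     * assumption: it stands in for the driver-specific screenshot call.
     */
    Path saveOnFailure(String testName, Supplier<byte[]> pngSource) {
        try {
            Files.createDirectories(dir);
            // Replace characters that are unsafe in file names.
            String fileName = testName.replaceAll("[^A-Za-z0-9._-]", "_") + ".png";
            return Files.write(dir.resolve(fileName), pngSource.get());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

In a TestNG setup, `resetDirectory()` would plausibly be called from a suite-level hook and `saveOnFailure(...)` from a listener’s failure callback such as `ITestListener.onTestFailure`. Attaching the saved bytes to the report is assumed to go through Allure’s attachment API (for example `Allure.addAttachment`). How the listener obtains the driver is project-specific and not covered here.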

Debugging with GenAI

Throughout the process, we had to regularly run the tests to see how our changes affected the outcome. One option was to do it manually—trigger the tests and sift through thousands of log lines and error messages that were more or less self-explanatory. The other option was to ask Copilot to handle it. In return, we received a user-friendly summary of the test results, often accompanied by clear explanations and even suggestions for addressing the issues it encountered. That’s definitely a practice that we’ll stick to in future projects.

Handover

We wrapped up with pair programming sessions to hand everything over to the on-project tester so he could maintain and evolve the suite going forward. During these sessions, we started working together on the code duplication issue from the refactoring list.

How the tester described the handover sessions:

“I found the experience of pair programming very helpful, and I am looking forward to continuing this initiative on my own for the maintenance, refactoring, and creation of new automated tests. During handover, the insight about guiding the AI with targeted, well-formulated prompts has shown me what Copilot can output as a solution.”

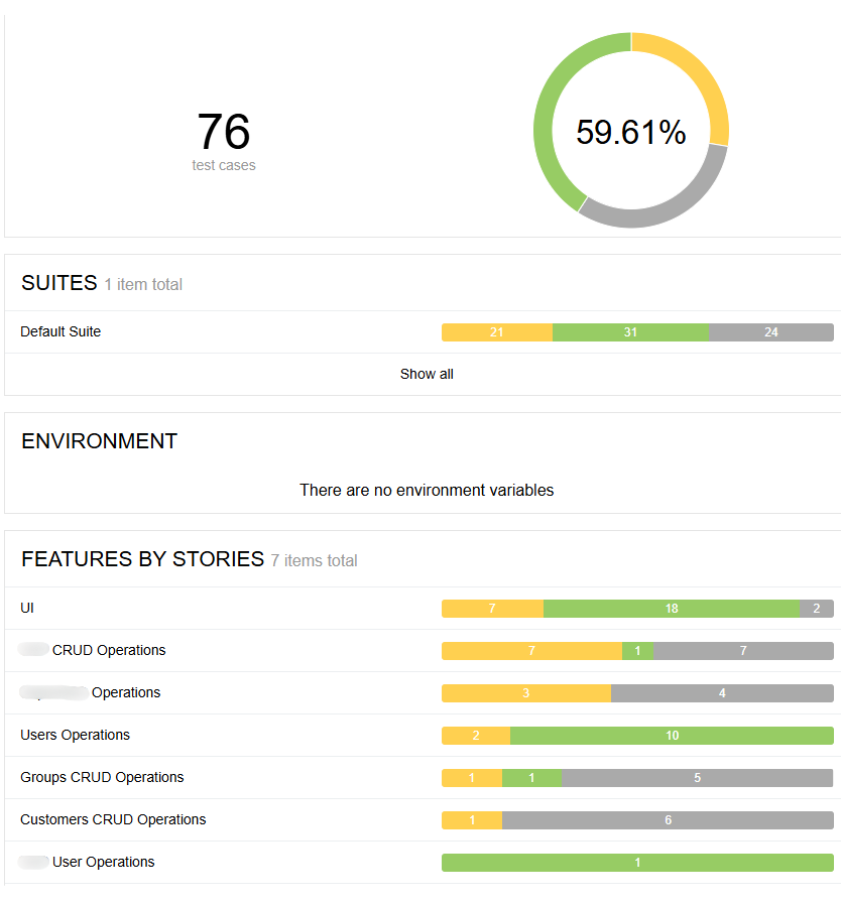

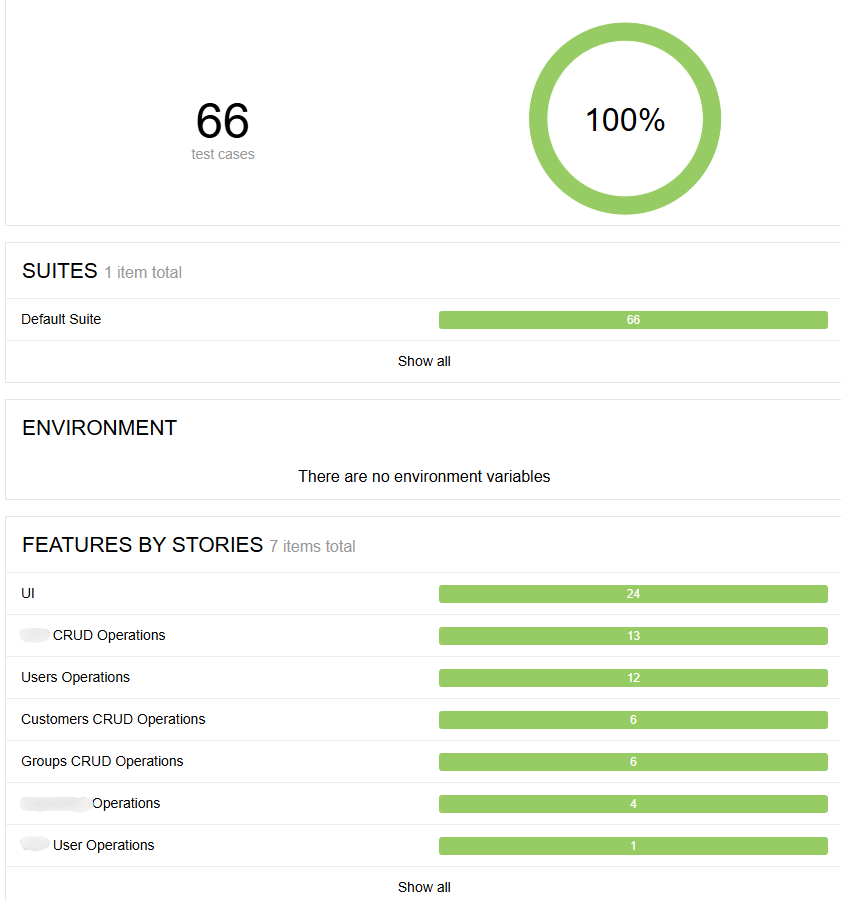

As a quick note, here’s the Allure report after fixing tests and refactoring the 3 items in the merged refactoring list:

Takeaways

GenAI can’t interpret business logic or project-specific needs. The tester’s ability to recognize and act on these aspects remains crucial. In fact, changes related to business logic had some of the highest impact on the overall relevance of the test suite.

There’s no such thing as a one-prompt fix. A well-crafted initial prompt helps, but you’ll still need to evaluate the results, refine your ask, and build up the solution step by step. Iterative prompting is not just helpful—it’s how the process works best.

Let AI assist with debugging—it’s surprisingly good at it. When allowed to run and analyze tests, Copilot (more precisely, Claude) produces clear, explicit summaries of failures, often including actionable suggestions. These insights are especially valuable when debugging gets noisy or time-consuming. Not to mention the time it saves – really appreciated when you’re working under a very tight deadline.

Every GenAI suggestion must be reviewed. Some proposals are impressively accurate, but others can go wildly off track. GenAI can support the work, but it doesn’t own the outcome—you do. Never skip the human review phase. If Copilot starts changing everything at once, pause. When refactoring suggestions become overwhelming, that’s a sign to shift your strategy. Instead of broad prompts, work in smaller, more focused steps. Granularity helps maintain control.

Agent-mode AI offers deeper integration and better context. Unlike standard chat, it can directly edit the relevant code sections and draws on broader context from the IDE. This reduces errors caused by human oversight, but the need for human review still stands.

Copilot can support a range of goals—if you’re clear about yours. Whether it’s fixing a bug, refactoring code, or understanding a legacy class, it can assist. The real challenge is knowing what outcome you want. Copilot is a strong ally—but it needs direction.

Using Copilot shifts us into a Business Analyst mindset. The responsibility to write clear, comprehensive requirements now falls to us when creating the prompts. Poor prompting leads to poor results, just like vague requirements lead to poor features.

Celebrate your progress, and let AI cheer you on. It will even boost your ego: “The test ran flawlessly and demonstrates that our refactoring completely resolved the issue while providing excellent traceability through meaningful data set identifiers!”

Final thoughts

Maintaining and refactoring a test automation framework becomes significantly more achievable with the support of GenAI tools—especially when you’re not the original author of the code. However, the project and business context remain essential.

The most impactful improvements stem from close collaboration with someone who has in-depth knowledge of the system and its testing priorities. So, if you step into this kind of task, make sure to work closely with a tester from the project—someone who understands the testing effort, the product, and what truly matters.

Coding skills and test automation experience are also extremely important, as without a good understanding of the code, there’s no way you can review Copilot’s proposals. In fact, you’ll be lost in the enormous quantity of code it can generate if not properly guided.

Explore further

Want to know more about our experience working on the project? From our testing approach and process to the roadblocks we encountered and the solutions we found, our team gathered these insights in an experience report that offers a real look behind the scenes.

Check out the blog article on the BBST® Courses website.
